Web server security: Command line-fu for web server protection

Introduction

Adequate web server security requires a proper understanding of a variety of tools and how to implement and use them. In this article, we will take a look at some command line tools that can be used to manage the security of web servers. The tools reviewed cover tasks such as encoding strings with Base64, analyzing files with hexdump, compressing and decompressing files with gzip, analyzing traffic with tcpdump and several others.


Overview

In order to securely manage web servers, you must be conversant with different command line tools. These tools also allow you to troubleshoot errors and perform file and traffic analysis. Let’s consider some tools and how we can use them to enhance the security of our web server.

Using curl for web server security

curl is a tool for transferring data to or from a server using URLs. It supports many network protocols, including HTTP, HTTPS and FTP, and is designed to work without user interaction.

The following usage examples demonstrate how curl can be used on the terminal:

1. Downloading multiple files

You can download multiple files with a single curl command by repeating the -O flag, which saves each file under its remote name.

$ curl -O http://yoursite.com/info.html -O http://mysite.com/about.html

2. Resuming an interrupted download

Suppose your connection is interrupted while you are downloading a file. You can resume the download with the -C - and -O flags. The “-” after -C tells curl to work out for itself where to continue from.

$ curl -C - -O http://yourdomain.com/yourfile.tar.gz

3. Querying HTTP headers

The -I flag sends a HEAD request and prints only the HTTP headers of the response:

$ curl -I google.com

4. Downloading files through a proxy with authentication

You can also download files through a proxy that requires authentication. The -x flag specifies the proxy and the -U flag supplies the proxy user name and password.

$ curl -x proxy.yourdomain.com:8080 -U user:password -O http://yourdomain.com/yourfile.tar.gz

Using OpenSSL for web server security

OpenSSL is a software library and command line toolkit that implements the Secure Sockets Layer (SSL) and Transport Layer Security (TLS) protocols, which encrypt communication between a client and a server.

This is, in simplified form, how a classic SSL/TLS handshake with RSA key exchange works:

- The client makes a connection with the server and sends the protocol versions and cipher suites that it supports.

- The server responds with the most secure option that both sides support and sends its certificate. The certificate contains the server’s public key and is signed by a certificate authority.

- The client then verifies the certificate and responds with a secret value that it sends to the server. This secret is encrypted with the server’s public key, so only the server can decrypt it.

- The server and client then both derive the same symmetric session keys from this secret, and encrypted communication begins.
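A certificate bundles the server's public key with identity fields and an issuer's signature. As a local sketch that needs no network access, the following generates a throwaway self-signed certificate and inspects it with the openssl command. The subject name example.test and the temporary file names are assumptions made for illustration.

```shell
# Work in a throwaway directory so no existing keys are touched.
workdir=$(mktemp -d)

# Generate a private key and a self-signed certificate valid for one day.
# The subject name example.test is a placeholder.
openssl req -x509 -newkey rsa:2048 -nodes \
  -keyout "$workdir/key.pem" -out "$workdir/cert.pem" \
  -subj "/CN=example.test" -days 1 2>/dev/null

# Show who the certificate identifies and who signed it.
cert_names=$(openssl x509 -in "$workdir/cert.pem" -noout -subject -issuer)
echo "$cert_names"

# Extract the public key that is embedded in the certificate.
cert_pubkey=$(openssl x509 -in "$workdir/cert.pem" -noout -pubkey)
echo "$cert_pubkey"
```

Because the certificate is self-signed, the subject and issuer are the same. A certificate for a real site would name a certificate authority as the issuer.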

The OpenSSL software library is one of the most widely used implementations of the SSL/TLS protocols. We shall see below how Base64 encoding and decoding can be done using OpenSSL.

Using grep and egrep for web server security

The grep utility is one of the tools that can be used within Linux/Unix to search files or piped input for lines of text that match a pattern. It supports a variety of usage options, such as searching with a fixed string, a basic or extended regular expression, or even a Perl-compatible regular expression.

There are a couple of grep variants. These include egrep (extended grep), fgrep (fixed grep) and rgrep (recursive grep). pgrep (process grep) shares the name but searches the list of running processes rather than text.

By default, grep uses basic regular expressions, in which metacharacters such as “(”, “)” and “|” are treated as literal characters. You must escape them with a backslash to give them their special meaning.

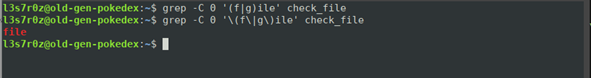

The screenshot below shows the output we receive when we search for the word “file” within our “check_file” file using grep.

For plain grep to treat the pattern as a group of alternatives, the metacharacters must be escaped (the “\|” alternation is a GNU extension):

$ grep -C 0 '\(f\|g\)ile' check_file

egrep is an extension of grep and is equivalent to running grep -E. Its advantage is that it uses extended regular expressions, so metacharacters are processed directly. This means that you no longer have to escape these characters before they are processed.

The screenshot below shows the output we receive when we search for the word “file” within our “check_file” file using egrep.

We use the following command to have egrep work correctly:

$ egrep -C 0 '(f|g)ile' check_file

fgrep is a version of grep, equivalent to grep -F, that treats the pattern as a fixed string. It is quite fast for searching through files and strings because it does not interpret special characters, whether they are escaped or not.

Using these examples, you can search files of code or text for specific characters or for strings that conform to a particular regular expression. This is important when analyzing log files and searching through a directory for certain required files.
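The difference between the three variants can be sketched on a small sample file. The file contents below are assumptions made for illustration, and grep -E and grep -F are used as the equivalents of egrep and fgrep.

```shell
# Create a small sample file in a temporary directory (contents are illustrative).
workdir=$(mktemp -d)
printf '%s\n' 'a file was uploaded' 'an agile deployment' 'a mile away' > "$workdir/check_file"

# Basic regular expression: the group and alternation must be escaped
# (the \| alternation is a GNU extension). Matches the first two lines.
grep '\(f\|g\)ile' "$workdir/check_file"

# Extended regular expression: no escaping needed. Matches the same two lines.
grep -E '(f|g)ile' "$workdir/check_file"

# Fixed string: the pattern is taken literally, so nothing matches here.
grep -F '(f|g)ile' "$workdir/check_file" || echo "no literal match"
```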

Using awk for web server security

You can use awk to perform text processing. awk is a complete pattern-scanning and processing language, which makes it more capable than sed for working with fields and columns, while sed remains convenient for simple line-by-line substitutions.

You can effectively use awk to:

- Define variables

- Use string and arithmetic operators

- Use control flow and loops

- Generate formatted outputs

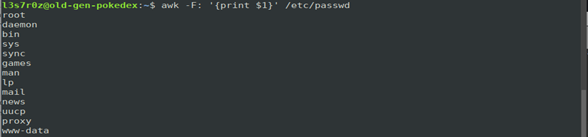

The following example shows how you can use awk to process the text from the /etc/passwd file in order to obtain only the user names within it.

We used the command below:

$ awk -F: '{print $1}' /etc/passwd

From the screenshot above, you can see that we can collect users from the passwd file using awk. Output from awk can then be used as input for another command and so on.

awk can also modify fields. Assigning the replacement value “Security” to the second field, which holds “Technology,” changes the string from “Information Technology” to “Information Security.”
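The command for this replacement is not reproduced in the text. A minimal sketch that matches the description, assuming the string is piped in with echo, could look like this:

```shell
# $2 is the second whitespace-separated field; assigning to it rewrites the record.
echo "Information Technology" | awk '{ $2 = "Security"; print $0 }'
# prints: Information Security
```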

We can also read a script from a file using awk, as shown below:

The screenshot above shows us using awk to read the contents of the /etc/passwd file and show the location of the home directory for every user.
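Reading an awk program from a file can be sketched as follows. The script is stored in a temporary file and its exact wording is an assumption; only the idea of printing each user's home directory from /etc/passwd comes from the description above.

```shell
# Write a small awk program to a temporary file.
script=$(mktemp)
cat > "$script" <<'EOF'
BEGIN { FS = ":" }             # fields in /etc/passwd are colon-separated
{ print $1 " home at " $6 }    # field 1 is the user name, field 6 the home directory
EOF

# The -f flag tells awk to read its program from the file.
awk -f "$script" /etc/passwd
```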

Using jq for web server security

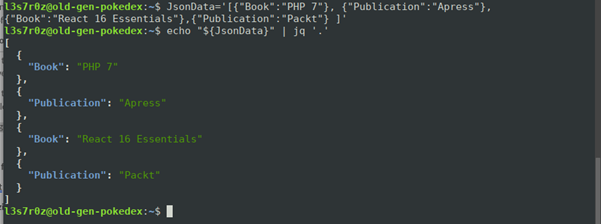

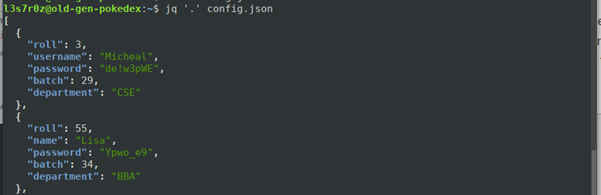

jq is a command line JSON processor. It is used to view, filter and transform data within JSON files, for example by extracting or removing a particular key. The idea is basically the same as what we have previously seen with sed and awk.

Since this tool is not installed by default on many Linux systems, you may have to install it. On Debian-based systems, use apt-get as shown below:

$ sudo apt-get install jq

You can format JSON data via the command line using jq, as shown below:

In the screenshot above, you have a variable called JsonData with some JSON information. When you echo out the contents of this variable and pipe it to jq, you get a nicely formatted output.

You can also read out a JSON file, such as a configuration file:
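Both uses can be sketched as follows. The variable name JsonData comes from the description above, while its contents and the temporary configuration file are assumptions made for illustration.

```shell
# A shell variable holding compact JSON (the contents are illustrative).
JsonData='{"server":"nginx","port":443,"tls":true}'

# Pipe it through jq; the identity filter "." pretty-prints the input.
echo "$JsonData" | jq '.'

# Read a JSON file instead and extract a single key.
config=$(mktemp)
echo "$JsonData" > "$config"
jq '.port' "$config"
# prints: 443
```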

Working with JSON has never been simpler on the command line, thanks to jq. You can now add multiple keys, add single keys, delete keys and even map values to keys. For more about how to use jq to achieve these things, refer here.

Using cut for web server security

The cut command allows you to cut portions of text from files or piped data and print the result to standard output. You are able to specify parts of the text to cut either by specifying a delimiter, byte position or character. Consider the table of data below:

You are able to cut and display the first and third fields using the command below:

The output of this operation would appear as shown below:

You might want to cut text based on a delimiter that separates some portion of text. To do this, you would want to use the following command:

In the command above, the -d switch is used to specify the delimiter, which in this case is “:”. The output of the operation above is shown below:
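The table and commands referred to in this section are not reproduced in the text. A minimal sketch with assumed sample data shows both ways of selecting fields:

```shell
# Tab-separated sample table with the columns id, name and role (illustrative).
data=$(mktemp)
printf '1\talice\tadmin\n2\tbob\tuser\n' > "$data"

# Tab is the default delimiter; print the first and third fields.
cut -f 1,3 "$data"

# With -d, fields are split on ":" instead. Print the user name and login
# shell from a passwd-style line.
printf 'root:x:0:0:root:/root:/bin/bash\n' | cut -d ':' -f 1,7
# prints: root:/bin/bash
```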

There are numerous examples of how you can use the cut command, and you can refer to these here.

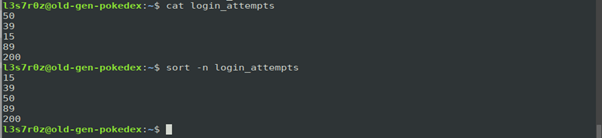

Using sort for web server security

The sort command is used to rearrange the lines of text in a file, producing a sorted list. You can have this done alphabetically or numerically. The following rules apply during sorting of text:

- Lines that start with a number will appear before those starting with a letter

- Lines that start with a letter earlier in the alphabet will appear before those that start with a letter later in the alphabet

- Lines that start with lowercase characters will appear before those starting with the same character uppercase

You can also sort the output of other commands, such as a listing of files, by piping it to sort.

The example below demonstrates how you can use the sort command to sort a list of login attempt counts from the lowest to the highest. We use the -n switch to tell the sort command to compare the values numerically.
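A numeric sort can be sketched with a few assumed lines, each starting with a login attempt count followed by a user name:

```shell
# Sample data: number of login attempts followed by the account (illustrative).
attempts=$(mktemp)
printf '%s\n' '25 root' '3 alice' '110 admin' > "$attempts"

# -n compares the leading numbers by value, so 3 sorts before 25 and 110.
sort -n "$attempts"
```

Without -n, the lines would be compared as text and “110 admin” would sort before “25 root”.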

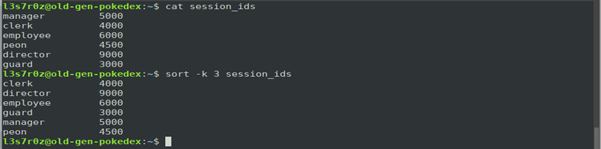

You can also sort data within a table based on the numbers in a column. The following table, for instance, contains session IDs. The table has three columns. See the table below:

The command used above includes the -k switch along with the wanted column, which is the third column. There are a variety of switches that can be used with the sort command. You can see them here.
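Sorting on a column can be sketched as follows. The three-column table of users, services and session IDs is an assumption made for illustration, and -n is added so that the third column is compared numerically.

```shell
# Sample table: user, service and session ID (illustrative).
sessions=$(mktemp)
printf '%s\n' 'alice web 3021' 'bob ssh 117' 'carol web 954' > "$sessions"

# -k 3 selects the third column as the sort key; -n compares it as a number.
sort -n -k 3 "$sessions"
```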

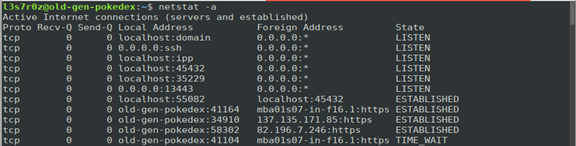

Using ss and netstat for web server security

netstat is a Linux/Unix utility that allows you to monitor network connections. Using this tool, you can determine established connections, listening ports, routing tables, network statistics and multicast memberships.

By default, netstat will show a list of open sockets. If you do not specify any address families, netstat will display the active sockets of all configured address families. You can use multiple switches with netstat. Let’s consider a few examples.

1. Showing all open ports (-a)

You can view all the open ports and established connections by using the -a switch, as shown below.

The screenshot above will show the listening and established connections, along with the local and remote addresses (or hostnames).

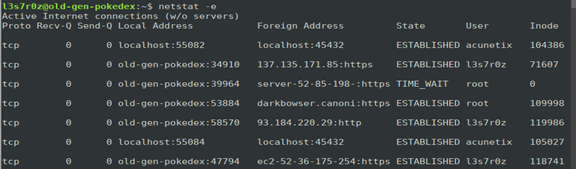

2. Showing network statistics (-e)

The -e switch displays extended information. Alongside the send and receive queues, you are able to view the user that owns each socket, as shown below:

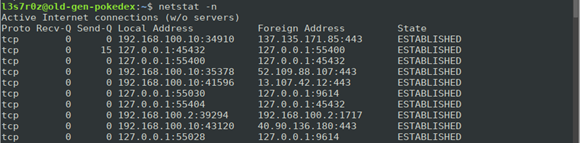

3. Showing numerical values of addresses and ports (-n)

You can display addresses and ports as numerical values instead of resolved hostnames and service names. This applies to both the local and foreign addresses.

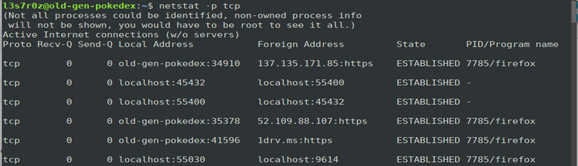

4. Showing statistics per protocol (-p)

You can display statistics per protocol, as shown below:

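Note that this use of the -p switch with a protocol name follows the Windows version of netstat, where you can replace tcp in the example above with any of the following: UDP, TCPv6 or UDPv6. On Linux, -p instead shows the PID and name of the program that owns each socket, and the -t and -u switches select TCP and UDP.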

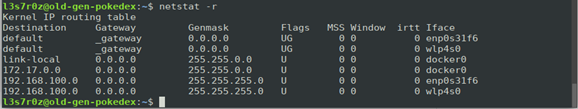

5. Showing the IP routing table (-r)

You can also display the IP routing table using the -r switch, as shown below:

There are many more switches that can be used with netstat. You can find the others not demonstrated above by checking the usage examples here.

On modern Linux distributions, netstat is considered obsolete and has largely been replaced by ss, which offers similar functionality. You can check out ss usage examples here.
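As a brief sketch of ss, assuming a Linux system with the iproute2 package installed, the following commands cover the most common checks:

```shell
# Listening TCP sockets with numeric addresses and ports
# (-t TCP, -l listening only, -n numeric).
ss -tln

# All TCP and UDP sockets, listening or not (-a), with numeric output.
ss -tuan

# Summary counts per socket type.
ss -s
```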

Using tcpdump for web server security

tcpdump is a packet capture tool that is used for troubleshooting network-related problems and can also be used for security analysis of packets within the network.

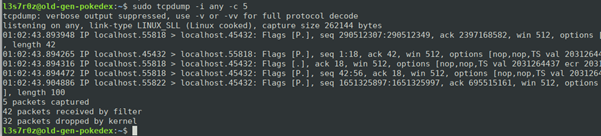

1. Capturing packets from the any interface

The any interface captures packets from all active interfaces. In the example below, you can see that we have captured five packets using -c 5 and from any interface using -i any.

The example usage above is important when you are testing connectivity within your servers.

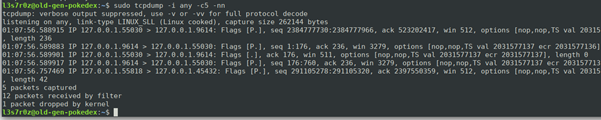

2. Capturing packets from the any interface and resolving IPs

By default, tcpdump resolves IP addresses and port numbers to hostnames and service names, as shown above. You can turn this off by issuing the -nn switch, which displays the numeric IP addresses and ports within the capture, as shown below:

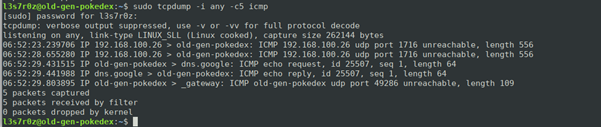

3. Filtering packets as per protocol

You can filter packets by specifying the protocol, as shown in the screenshot below:

The importance of packet filtering can really be felt when troubleshooting network-related issues within your server.

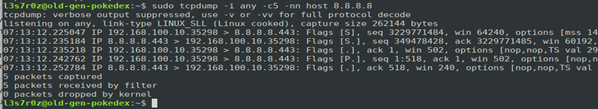

4. Capturing traffic to and from a specific host

You can also capture traffic to and from a specific host. Consider the example below:

In the example above, a GET request was made to the Google DNS server at 8.8.8.8.

There are quite a number of usage examples that you can find here if you’re interested in finding out more capabilities of tcpdump.

Using testssl.sh for web server security

In case you would like to test for SSL/TLS-related security, you might want to use the free and open-source script called testssl. This script allows you to check encryption-enabled services for supported ciphers, protocols and cryptographic flaws. Installation instructions can be found on GitHub here.

1. Checking for server defaults, headers, vulnerabilities, etc.

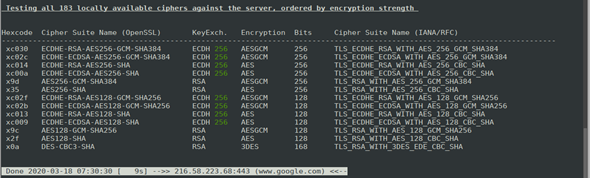

You can check each local cipher remotely using the -e flag. This will take time, so adding the --fast flag runs the check a bit faster. The following command was used:

./testssl.sh -e --fast --parallel https://www.google.com/

The following screenshot shows you what to expect:

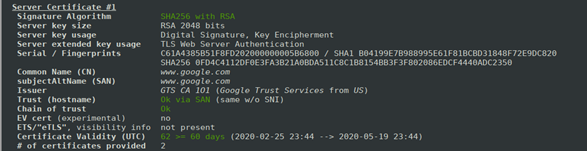

2. Checking the default server pick and certificate

You are able to check the default server certificate and pick by using the command below:

./testssl.sh -S https://www.google.com/

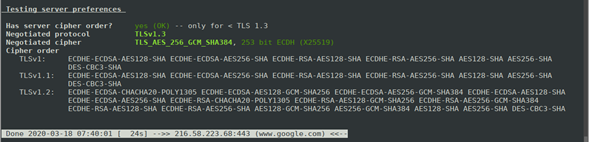

3. Checking the default server protocol and cipher

The default server protocol and cipher can be determined:

./testssl.sh -P https://www.google.com/

You can access the testssl.sh help file by using the --help flag with the following command:

./testssl.sh --help

Using Base64 for web server security

Base64 is an encoding scheme that is normally used to represent binary data in an ASCII string format. You can use this encoding scheme to encode text or files within your server, but note that Base64 is not encryption: anyone can decode the data without a key. There are several tools that can be used on the terminal to either encode or decode data. We shall first consider one of them, the base64 command.

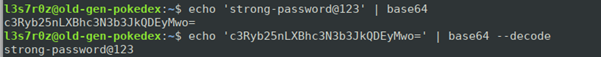

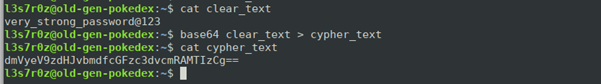

1. Bash encoding and decoding text using the base64 command

You can either encode or decode a string of text using the base64 bash command, as shown below:

The usage above involves piping a string into the base64 command, whether it is cleartext or Base64-encoded text.
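A minimal sketch of encoding and decoding a string, assuming the GNU coreutils version of base64 and an illustrative sample string:

```shell
# Encode: printf avoids the trailing newline that echo would add to the input.
encoded=$(printf '%s' 'Hello, World!' | base64)
echo "$encoded"
# prints: SGVsbG8sIFdvcmxkIQ==

# Decode: the -d flag reverses the encoding.
decoded=$(echo "$encoded" | base64 -d)
echo "$decoded"
# prints: Hello, World!
```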

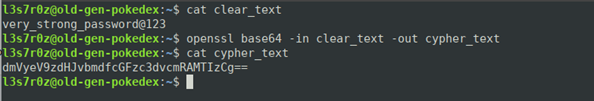

2. Bash encoding and decoding files using the base64 command

To encode or decode a file, you simply run base64 against the file and redirect it to a new file, as shown below:

The resulting file is base64-encoded.
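Encoding and decoding a file can be sketched as follows, again assuming GNU base64. The file names and contents are placeholders.

```shell
# Create a sample file in a temporary directory.
workdir=$(mktemp -d)
printf 'secret=example\n' > "$workdir/notes.txt"

# Encode the file and redirect the result to a new file.
base64 "$workdir/notes.txt" > "$workdir/notes.b64"

# Decode it again and confirm that the round trip is lossless.
base64 -d "$workdir/notes.b64" > "$workdir/notes.decoded"
cmp "$workdir/notes.txt" "$workdir/notes.decoded" && echo "round trip OK"
```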

You are not limited to the base64 command. OpenSSL provides Base64 encoding and decoding capabilities as well.

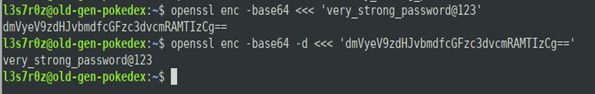

1. Base64 encoding and decoding text using the OpenSSL command

The OpenSSL command can be useful when performing encoding and decoding of text. You simply pass the enc and -base64 options to OpenSSL, then send the text to standard input. See below:
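A minimal sketch of the text case, using an illustrative sample string:

```shell
# Encode a string read from standard input.
encoded=$(printf '%s' 'Hello, World!' | openssl enc -base64)
echo "$encoded"

# Decode it again by adding the -d flag. echo supplies the trailing
# newline that the decoder expects at the end of a Base64 line.
decoded=$(echo "$encoded" | openssl enc -base64 -d)
echo "$decoded"
```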

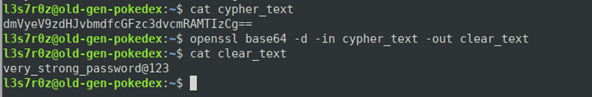

2. Base64 encoding and decoding files using OpenSSL command

You are also able to encode files into base64. To do this, specify the input file via the -in flag and the output file via the -out flag. This can be seen below:

To decode an encoded file, you can use the -d flag along with the -in and -out flags as shown below:
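Both file operations can be sketched together. The file names and contents are placeholders.

```shell
# Create a sample file in a temporary directory.
workdir=$(mktemp -d)
printf 'secret=example\n' > "$workdir/notes.txt"

# Encode: -in names the input file and -out the Base64 output file.
openssl enc -base64 -in "$workdir/notes.txt" -out "$workdir/notes.b64"

# Decode: the same flags plus -d.
openssl enc -base64 -d -in "$workdir/notes.b64" -out "$workdir/notes.decoded"

# Confirm that the decoded file matches the original.
cmp "$workdir/notes.txt" "$workdir/notes.decoded" && echo "round trip OK"
```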

Using hexdump and xxd for web server security

hexdump is one of the many tools that are able to represent data in various formats such as hexadecimal, octal, ASCII and decimal. You can use this tool to inspect files on the server for text or other specific content. hexdump reads files or standard input and converts the data into the output format of your choice. The first column in the screenshots below is always the offset.

1. Hexdump canonical hex+ASCII display

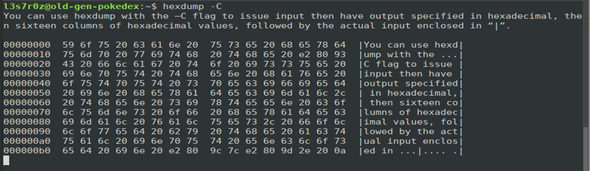

With the -C flag, hexdump displays the input offset in hexadecimal, followed by sixteen space-separated, two-column hexadecimal bytes, followed by the same sixteen bytes as ASCII text enclosed in “|” characters.

2. Hexdump two-byte decimal display (-d)

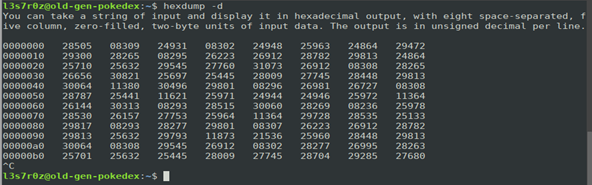

You can take a string of input and display the input offset in hexadecimal, followed by eight space-separated, five-column, zero-filled, two-byte units of input data, in unsigned decimal, per line.

3. Hexdump two-byte octal display (-o)

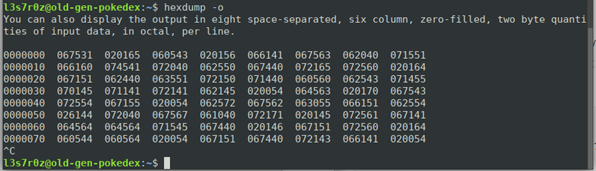

You can also display the output in eight space-separated, six-column, zero-filled, two-byte quantities of input data, in octal, per line.

4. Hexdump two-byte hexadecimal display (-x)

You can also display the output in eight space-separated, four-column, zero-filled, two-byte quantities of input data, in hexadecimal, per line.
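The four display modes can be compared on the same short input. The sample string is an assumption, and the two-byte modes group bytes in the byte order of the machine, so their exact output varies between systems.

```shell
# Canonical hex+ASCII display: offset, hex bytes, then the text between "|".
printf 'Hello' | hexdump -C

# Two-byte units shown in unsigned decimal.
printf 'Hello!' | hexdump -d

# Two-byte units shown in octal.
printf 'Hello!' | hexdump -o

# Two-byte units shown in hexadecimal.
printf 'Hello!' | hexdump -x
```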

We cannot fully demonstrate the entire usage of hexdump here, so you can type man hexdump on the terminal to see the full description of this utility.

Using gzip and libzip for web server security

The gzip command allows you to compress files into the .gz file format while maintaining the original file mode, ownership and timestamp. gzip compresses individual files rather than folders; to compress a folder, you would first bundle it with a tool such as tar. By default, the original file is replaced by the compressed file when you invoke gzip. The following examples show you how to use gzip.

1. Keeping the original file

You can compress a file and choose to keep the original file by using the -k flag, as shown below:

Notice above that the original properties of the original PDF file are preserved.
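A minimal sketch of this, in which a small text file stands in for the PDF used in the screenshots:

```shell
# Create a sample file in a temporary directory.
workdir=$(mktemp -d)
printf 'sample log line\n' > "$workdir/report.txt"

# -k keeps report.txt and writes report.txt.gz next to it.
gzip -k "$workdir/report.txt"

# Both the original and the compressed file are now listed.
ls -l "$workdir"
```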

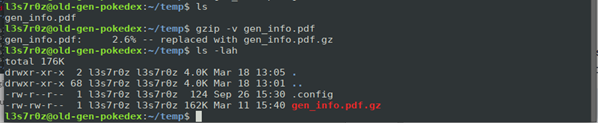

2. Performing verbose output

You can also perform verbose output:

You can see above that the original PDF file is now deleted as the compressed file is being generated.
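Verbose mode can be sketched in the same way, with a placeholder text file in place of the PDF:

```shell
# Create a sample file in a temporary directory.
workdir=$(mktemp -d)
printf 'sample log line\n' > "$workdir/report.txt"

# -v reports the file name and the compression ratio while compressing.
# Without -k, report.txt is replaced by report.txt.gz.
gzip -v "$workdir/report.txt"

# Only the compressed file remains.
ls "$workdir"
```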

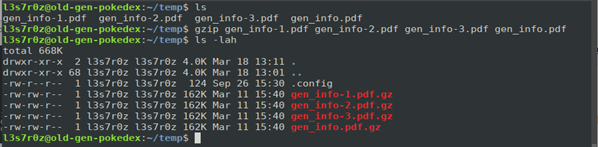

3. Compressing multiple files

Compressing multiple files is quite easy. Just pass all the files to be compressed, and each one will be compressed into its own .gz file and the originals deleted. To preserve the originals, just use the -k flag.

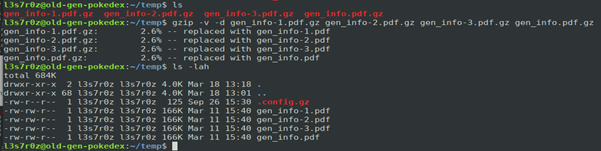

4. Decompressing files

You can decompress files by using the -d flag:

Notice above that we originally had compressed files which we decompressed to obtain the originals. We are then left with the PDFs.
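Compressing several files and then restoring them can be sketched as follows. The file names and contents are placeholders for the PDFs in the screenshots.

```shell
# Create two sample files in a temporary directory.
workdir=$(mktemp -d)
printf 'first file\n' > "$workdir/a.txt"
printf 'second file\n' > "$workdir/b.txt"

# Each file is compressed into its own .gz file and the originals are removed.
gzip "$workdir/a.txt" "$workdir/b.txt"
ls "$workdir"

# -d decompresses the .gz files and restores the originals.
gzip -d "$workdir/a.txt.gz" "$workdir/b.txt.gz"
ls "$workdir"
```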

To view other usage options, you might want to check the man pages by typing man gzip on the terminal.

Conclusion

In this article, we considered some tools that you can use for the security of your servers. We have discussed the basics of these tools and seen how we can manage text and text files such as logs, analyze files and traffic, encode text and files, and compress and decompress files.

We encourage you to proceed and find tools that you can use to achieve similar results. We hope you will find these tools useful in securing your servers.

